You’d be hard-pressed to find an email marketer who believes AB testing doesn’t work. However, how well it works and which method is best are hot topics of debate.

What we do know is that split testing delivers a lift: 13.2% on average, according to a recent survey of email and brand marketers about their subject line split testing, conducted by my agency, Alchemy Worx.

Key Findings:

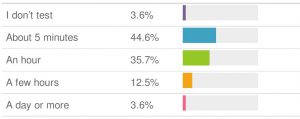

1. Although most marketers regard subject lines as the most important element affecting response, 74% of them run their subject line tests for less than an hour.

“How long do you run your subject line AB split tests?”

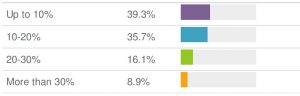

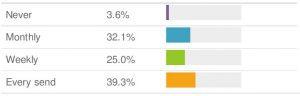

2. Despite seeing an average lift in response of 13.2% from AB split testing, 61% of marketers do not test subject lines on every campaign they send out.

“On average, what sort of improvement do you see from your subject line AB test?”

“How often do you AB split test your subject lines?”

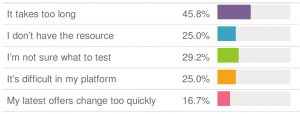

3. The number one reason for not testing with every send is “it takes too long.”

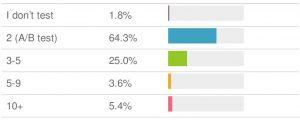

4. Only 35% of marketers test more than three subject lines when they run a test.

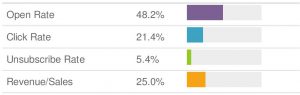

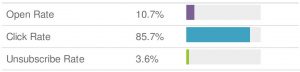

5. 48.2% of marketers regard the open rate as the most important KPI when AB testing, but 85.7% think click rate most directly correlates to revenue.

In truth, there is nothing particularly new in these survey findings. So why don’t marketers run an AB subject line test every time they send an email? By and large, email marketing is under-resourced, so execution tends to take priority over optimization.

Therein lies the dilemma: How do you measure the cost of something you’re not doing so you can make the case for doing it?

So we’ve put together some simple cost calculation benchmarks to give you an idea of how much you might be losing by not split testing all the time.

How much are you losing by not AB testing?

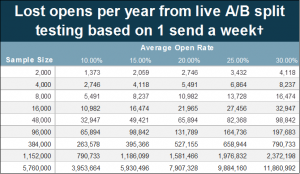

Each marketer or brand can (and should) calculate this for their own email program and clients, using their own numbers for accuracy. But we’ve produced a ballpark lookup table so you can get an idea of how much revenue you may be leaving on the table.

It’s based on a few assumptions: that you send an email to your whole list once a week, and that the average open lift you get from split testing is 13.2%.

Although your own numbers will vary, split testing clearly has an impact on the bottom line. Even at a modest 10 cents per open, your program could be missing out on thousands of dollars in additional revenue if you aren’t consistently split testing.
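The lookup table rests on simple arithmetic, so here is a minimal Python sketch of the same kind of estimate. It assumes, as the table does, one send to the whole list each week and a 13.2% average open lift; it adds the simplifying assumption that revenue scales linearly with opens at a fixed value per open. `EmailProgram` and `annual_revenue_lost` are hypothetical names, and the list size, open rate and value per open in the example are illustrative inputs, not survey data.

```python
from dataclasses import dataclass

SURVEY_AVG_LIFT = 0.132  # average open lift from subject line split testing (survey)
SENDS_PER_YEAR = 52      # assumption: one email to the whole list every week


@dataclass
class EmailProgram:
    list_size: int         # subscribers who receive every send
    open_rate: float       # open rate without testing, e.g. 0.20 for 20%
    value_per_open: float  # assumed revenue per open, in dollars


def annual_revenue_lost(program: EmailProgram, lift: float = SURVEY_AVG_LIFT) -> float:
    """Revenue forgone per year by never split testing, assuming testing would
    raise opens by `lift` and every extra open is worth `value_per_open`."""
    opens_per_send = program.list_size * program.open_rate
    extra_opens_per_send = opens_per_send * lift
    return extra_opens_per_send * program.value_per_open * SENDS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical program: 100,000 subscribers, 20% open rate, 10 cents per open.
    program = EmailProgram(list_size=100_000, open_rate=0.20, value_per_open=0.10)
    print(f"Estimated annual revenue lost: ${annual_revenue_lost(program):,.2f}")
```

For this hypothetical program the sketch estimates about $13,728 a year: 2,640 extra opens per send at 10 cents each, over 52 sends. Swapping in your own list size, open rate and value per open gives your own ballpark figure.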

How much are bad subject lines costing you?

Using the same assumptions, we can also estimate how much revenue leaks away to losing subject lines even if you run live split tests all the time.

Again, your own numbers will vary, but leakage to losing subject lines (the “testing tax,” as we call it) could be eating into your success even if you test often. So, why not look into your own email program to see how much you’re losing by not split testing?
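The testing tax can be estimated in a similar way. This rough sketch assumes a classic split test in which each candidate subject line goes to an equal-sized test cell and the winner goes to the rest of the list, and that every losing cell opens at the baseline rate while the winner opens 13.2% higher. The function name `annual_testing_tax`, the default of two subject lines and the 10% test cell share are hypothetical choices for illustration, not figures from the survey.

```python
SURVEY_AVG_LIFT = 0.132  # assumed lift of the winning line over a losing line (survey average)
SENDS_PER_YEAR = 52      # assumption: one split-tested email to the whole list every week


def annual_testing_tax(
    list_size: int,
    open_rate: float,               # open rate a losing subject line achieves
    value_per_open: float,          # assumed revenue per open, in dollars
    subject_lines: int = 2,         # hypothetical: candidate lines tested per send
    test_cell_share: float = 0.10,  # hypothetical: share of the list in each test cell
    lift: float = SURVEY_AVG_LIFT,
) -> float:
    """Revenue leaked per year to the test cells that received a losing subject line."""
    if subject_lines < 2:
        raise ValueError("a split test needs at least two subject lines")
    if subject_lines * test_cell_share > 1:
        raise ValueError("test cells cannot cover more than the whole list")
    # Every cell except the winner's received a losing subject line.
    losing_recipients = (subject_lines - 1) * test_cell_share * list_size
    # Each losing recipient misses the extra opens the winning line would have earned.
    missed_opens_per_send = losing_recipients * open_rate * lift
    return missed_opens_per_send * value_per_open * SENDS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical program: 100,000 subscribers, 20% open rate, 10 cents per open.
    for lines in (2, 4):
        tax = annual_testing_tax(100_000, 0.20, 0.10, subject_lines=lines)
        print(f"{lines} subject lines: ${tax:,.2f} a year")
```

Under these hypothetical inputs, testing two subject lines leaks about $1,373 a year, and testing four lines in 10% cells triples the number of losing recipients and therefore the tax. This is one way to see why testing more subject lines the traditional way gets expensive.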

Is this likely to make more people test?

Not if they continue to test the way we always have. The problem with AB testing as we do it now is that it is not scalable. Every test requires you to build, test and deploy at least one email, as well as expose some of your customers to at least one suboptimal subject line.

So is there a solution to these challenges? Yes. We call it “virtual testing.”

Virtual Testing, Real Results

As an agency that’s been around for 15 years, we’ve come across these challenges with split testing many times. Our answer was to develop a virtual testing platform for subject lines.

It works by creating a replica database that mimics the behaviour of your customers. This allows you to send virtual tests in seconds and instantly compare your subject lines to see which will perform best with 100% of your list.

Because it takes only seconds to send a test “campaign,” it removes the “it takes too long” problem of split testing every send. In fact, you could test hundreds of different subject lines in the time it would take you to run a traditional split test!

It also means you never have to send a losing subject line to your real customers. And because you are making a judgement based on how your entire list would behave, rather than on a small sample, the results are more accurate than those of traditional split testing with small sample groups.
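To illustrate why small sample groups can mislead, here is a standard two-proportion z-test for comparing the open rates of two test cells. This is a generic statistical check, not how the Virtual Testing Tool works; the function name `open_rate_p_value` and the cell sizes and open counts in the example are hypothetical.

```python
import math


def open_rate_p_value(opens_a: int, sent_a: int, opens_b: int, sent_b: int) -> float:
    """Two-sided p-value for the difference between two open rates
    (two-proportion z-test with a pooled standard error)."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        return 1.0  # both cells opened at 0% or both at 100%: no measurable difference
    z = (rate_a - rate_b) / std_err
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


if __name__ == "__main__":
    # Hypothetical 13.2% relative lift (22.64% vs 20% opens) at two cell sizes.
    for cell in (1_000, 10_000):
        winner_opens = round(cell * 0.2264)
        loser_opens = round(cell * 0.20)
        p = open_rate_p_value(winner_opens, cell, loser_opens, cell)
        print(f"{cell:>6} per cell: p = {p:.4f}")
```

With 1,000 recipients per cell, the same 13.2% lift is not statistically distinguishable from noise (p is roughly 0.16); with 10,000 per cell, it is (p is well below 0.001). A small test cell can therefore crown the wrong winner more often than marketers expect.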

Better still, you can upload click and revenue data and use these instead of open rate to determine which subject lines you should send. The tool even offers recommendations on which words to change in your subject lines to improve your performance.

So if you want to split test your subject lines effectively and efficiently on every send, try our Virtual Testing Tool for free.

Maropost for Marketing’s Multivariate Testing feature makes AB testing easy, efficient and effective: it tests everything from subject lines to send times, at the same time and in the same campaign.

*These calculations assume that the current program does no split testing and sends one email a week to the entire list. The lift is the 13.2% average reported in the survey.

†These calculations assume that the current program split tests every campaign and sends one email a week to the entire list. The lift is the 13.2% average reported in the survey.

About Dela Quist

Dela Quist is the founder and CEO of UK-based Alchemy Worx. He estimates that he’s clocked over 20,000 hours thinking about email. Clients include Getty Images, Hotels.com, Sony PlayStation, and Tesco.


